Stop Manually Updating Your SDKs

A developer spent three hours manually updating 47 files to add security headers to their OpenAI calls. They missed file #48. File #48 was the one that got breached.

This is the Integration Gap. Security tools often fail not because they are bad, but because they are hard to install.

If you have to manually find every new OpenAI() call in your 100,000-line codebase and wrap it in a try-catch block, you are going to make a mistake.

The "Zero-Code" Philosophy

We realized that if we wanted developers to actually use PromptGuard, the friction had to be zero. "Read the docs and copy-paste this snippet" is too much friction.

So we built a CLI that modifies your code for you. Safely.

How It Works (AST, not Regex)

We didn't just run sed to find and replace strings. That's how you break production.

We built an AST (Abstract Syntax Tree) transformer.
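Here is a minimal Python sketch of the regex-versus-AST point. It uses the standard-library ast module as a stand-in. PromptGuard itself uses tree-sitter, and this is not its code. The sample source is made up. A line-based regex flags every OpenAI( it sees, including the ones inside comments and strings. Walking the parse tree finds only real constructor calls.

```python
import ast
import re

# Made-up sample: two real calls, plus "OpenAI(" in a comment and in a string.
SOURCE = '''
from openai import OpenAI

# TODO: replace OpenAI() with the wrapped client later
client = OpenAI()
help_text = "call OpenAI() to get a client"
backup = OpenAI(
    timeout=30,
)
'''


def regex_matches(source: str) -> list[int]:
    """Line numbers where a naive regex sees the text 'OpenAI('."""
    return [
        lineno
        for lineno, line in enumerate(source.splitlines(), start=1)
        if re.search(r"\bOpenAI\(", line)
    ]


def ast_matches(source: str) -> list[int]:
    """Line numbers of actual OpenAI(...) call expressions in the parse tree."""
    tree = ast.parse(source)
    return sorted(
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.Call)
        and isinstance(node.func, ast.Name)
        and node.func.id == "OpenAI"
    )


print("regex:", regex_matches(SOURCE))
print("ast:  ", ast_matches(SOURCE))
```

On this sample, the regex reports lines 4 through 7. The AST walk reports only lines 5 and 7, which are the two real calls. A sed rewrite driven by the regex would also edit the comment and corrupt the string literal.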

When you run:

promptguard secure .

The CLI:

- Parses your code into an AST (using tree-sitter).
- Identifies LLM client instantiations (OpenAI, Anthropic, LangChain).
- Injects the base_url and headers configuration directly into the constructor.
- Preserves your comments, formatting, and weird indentation.
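Here is a sketch of the injection step in the same spirit. This minimal Python version uses the standard-library ast module instead of tree-sitter, and it only handles direct OpenAI(...) calls. The proxy URL and the X-Example-Guard header are hypothetical placeholders, not PromptGuard's real values. The sketch relies on the OpenAI Python client's constructor accepting base_url and default_headers keyword arguments.

The sketch does not regenerate code from the tree, because Python's ast discards comments. Instead, it uses the tree only to locate byte positions and splices new text into the original source. That is one way to leave comments and indentation untouched.

```python
import ast

# Hypothetical values for illustration; not PromptGuard's real endpoint or header.
INJECTED = 'base_url="https://proxy.example.com/v1", default_headers={"X-Example-Guard": "on"}'


def secure_source(source: str) -> str:
    """Add base_url/default_headers to every OpenAI(...) call by splicing text.

    Only characters inside each call change, so comments, blank lines and
    indentation elsewhere are preserved. Requires Python 3.9+ (keyword node
    positions); exotic cases such as parenthesized arguments are not handled.
    """
    tree = ast.parse(source)
    data = source.encode("utf-8")  # ast column offsets count UTF-8 bytes

    # Byte offset at which each 1-based line starts.
    line_starts = [0]
    for line in data.splitlines(keepends=True):
        line_starts.append(line_starts[-1] + len(line))

    def offset(lineno: int, col: int) -> int:
        return line_starts[lineno - 1] + col

    edits = []  # (byte offset, text to insert there)
    for node in ast.walk(tree):
        if not (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Name)
                and node.func.id == "OpenAI"):
            continue
        if any(kw.arg == "base_url" for kw in node.keywords):
            continue  # already configured, so re-running is a no-op
        existing = [*node.args, *node.keywords]
        if existing:
            # Insert right after the last argument; a trailing comma or
            # comment that follows it stays where it was.
            last = max(existing, key=lambda n: (n.end_lineno, n.end_col_offset))
            edits.append((offset(last.end_lineno, last.end_col_offset), ", " + INJECTED))
        else:
            # Empty call: insert just before the closing parenthesis.
            edits.append((offset(node.end_lineno, node.end_col_offset) - 1, INJECTED))

    # Apply from the end of the file backwards so earlier offsets stay valid.
    for pos, text in sorted(edits, reverse=True):
        data = data[:pos] + text.encode("utf-8") + data[pos:]
    return data.decode("utf-8")


before = '''from openai import OpenAI

client = OpenAI()  # default client
other = OpenAI(
    timeout=30,  # seconds
)
'''
after = secure_source(before)
print(after)
```

On the sample, client = OpenAI() gains both keyword arguments and keeps its trailing # default client comment. The multi-line call gets them after timeout=30, and its # seconds comment is left in place. Calls that already pass base_url are skipped, so running the transform twice yields the same file as running it once.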

Safety First

We know "automatic code modification" sounds scary. That's why:

- Dry Run by Default: It shows you a diff of exactly what will change.
- Instant Rollback: It creates a backup, and one command (promptguard revert) undoes everything.
- Local Only: Your code never leaves your machine. The CLI runs locally.
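Here is a sketch of how the dry-run and rollback guarantees could be approximated with the Python standard library. It is hypothetical: the function names and backup layout are assumptions, not how promptguard revert actually works. The preview uses difflib.unified_diff and never writes to disk. Before a file is overwritten, its original is copied into a backup directory that mirrors the source tree. A single revert call then copies every backup back into place.

```python
import difflib
import shutil
from pathlib import Path


def dry_run_diff(path: Path, new_text: str) -> str:
    """Return a unified diff of the proposed change; the file is not modified."""
    old_text = path.read_text(encoding="utf-8")
    return "".join(
        difflib.unified_diff(
            old_text.splitlines(keepends=True),
            new_text.splitlines(keepends=True),
            fromfile=f"a/{path.name}",
            tofile=f"b/{path.name}",
        )
    )


def apply_with_backup(root: Path, rel: Path, new_text: str, backup_dir: Path) -> None:
    """Save root/rel under backup_dir/rel, then overwrite it with new_text."""
    target = root / rel
    saved = backup_dir / rel  # mirror the tree so same-named files don't collide
    saved.parent.mkdir(parents=True, exist_ok=True)
    if not saved.exists():  # keep the oldest copy so revert restores the original
        shutil.copy2(target, saved)
    target.write_text(new_text, encoding="utf-8")


def revert(root: Path, backup_dir: Path) -> None:
    """Undo every applied change by copying each backup back into place."""
    for saved in backup_dir.rglob("*"):
        if saved.is_file():
            shutil.copy2(saved, root / saved.relative_to(backup_dir))
```

Everything here reads and writes local files only, which is consistent with the "Local Only" point: nothing in the preview or rollback path needs the network.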

The Result

You can secure a monolithic repo with 500+ LLM calls in under 4 seconds.

$ promptguard secure src/
> Found 512 OpenAI instances.
> Secured 512 instances.
> 0 errors.
> Time: 3.2s

Security shouldn't be a chore. It should be a command.

READ MORE

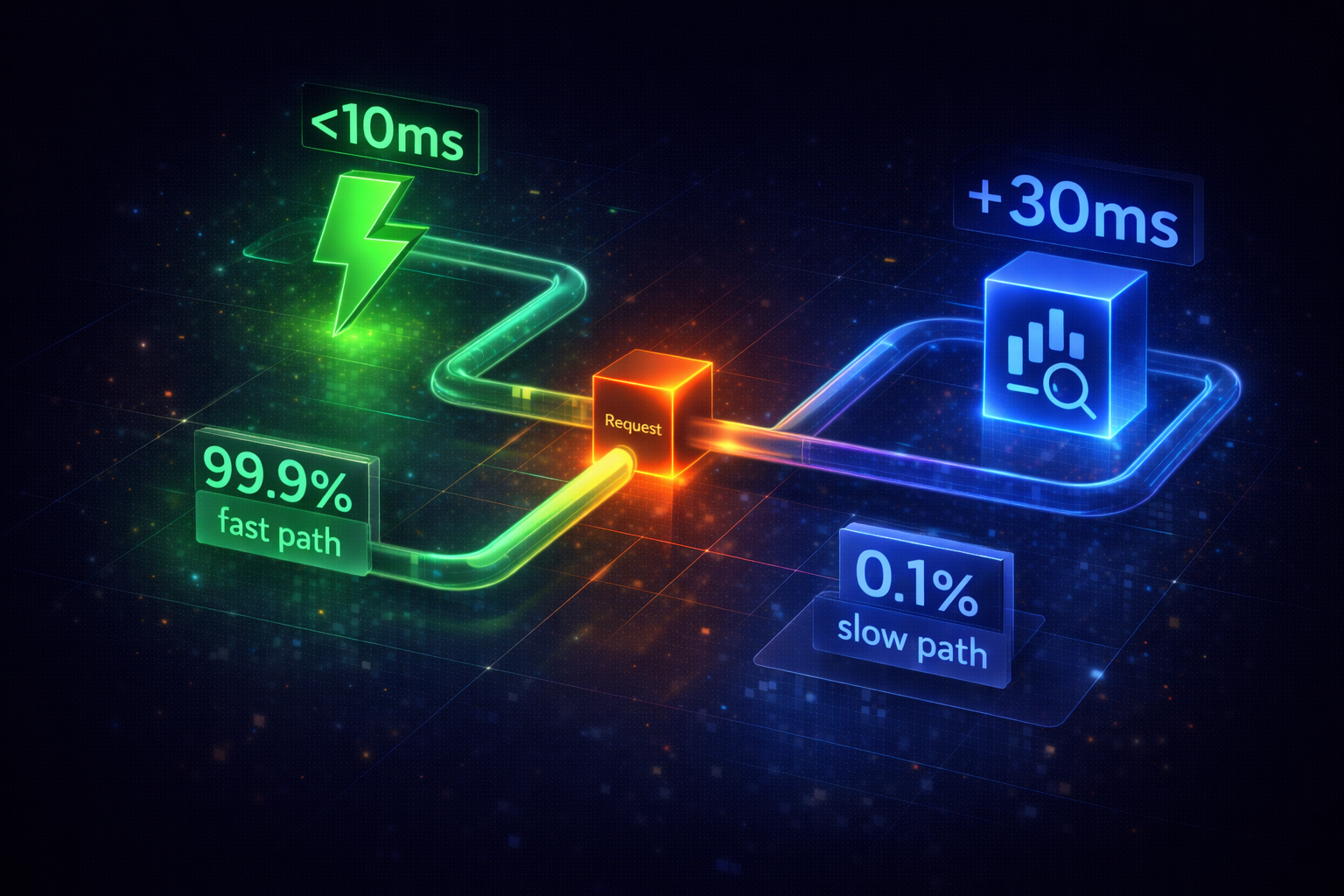

How We Built a Sub-10ms AI Firewall

Security is useless if it destroys your latency. Here is the engineering story of how we optimized PromptGuard's hybrid architecture to inspect prompts in 8ms.

The Physics of Latency: Why We Don't Use LLMs to Secure LLMs

Everyone wants AI security, but nobody wants to add 500ms to their request. Here is why we bet on classical ML and Rust for our detection engine.

Why We Self-Host Our Security Stack (And You Should Too)

Sending your data to a security vendor is an oxymoron. If you care about privacy, your security tools should run in your VPC.