The Physics of Latency: Why We Don't Use LLMs to Secure LLMs

There is a popular architecture for AI security that goes like this:

- User sends prompt.

- Middleware sends prompt to GPT-4 with

"Is this safe?". - GPT-4 says

"Yes". - Middleware sends prompt to your actual model.

This architecture is dead on arrival.

The Math Doesn't Work

- Your Model: 500ms (Time to First Token).

- Security Model: 500ms.

- Total Latency: 1s+.

You just doubled your latency. For a voice agent or a real-time copilot, that is unacceptable.

The Hybrid Architecture

We set a budget: 10ms. To hit that, we had to get off the LLM train.

1. The "Dumb" Models (0.5ms)

We run specialized classifiers (XGBoost/Linear) on simple features:

- Prompt length.

- Character distribution.

- Known malicious n-grams.

These catch the "script kiddie" attacks instantly.

2. The Transformers (8ms)

We fine-tuned DeBERTa-v3-small (a 40MB model) on our attack dataset. It runs on CPU. It fits in L3 cache. It understands semantics ("Ignore instructions" vs. "Translate instructions") but is 100x faster than GPT-4.

3. The Verifier (Async)

If a prompt is suspicious (confidence 0.6-0.8), we don't block it immediately if the risk is low. We let it through, but we asynchronously send it to a larger model for analysis. If it turns out to be an attack, we ban the user after the fact.

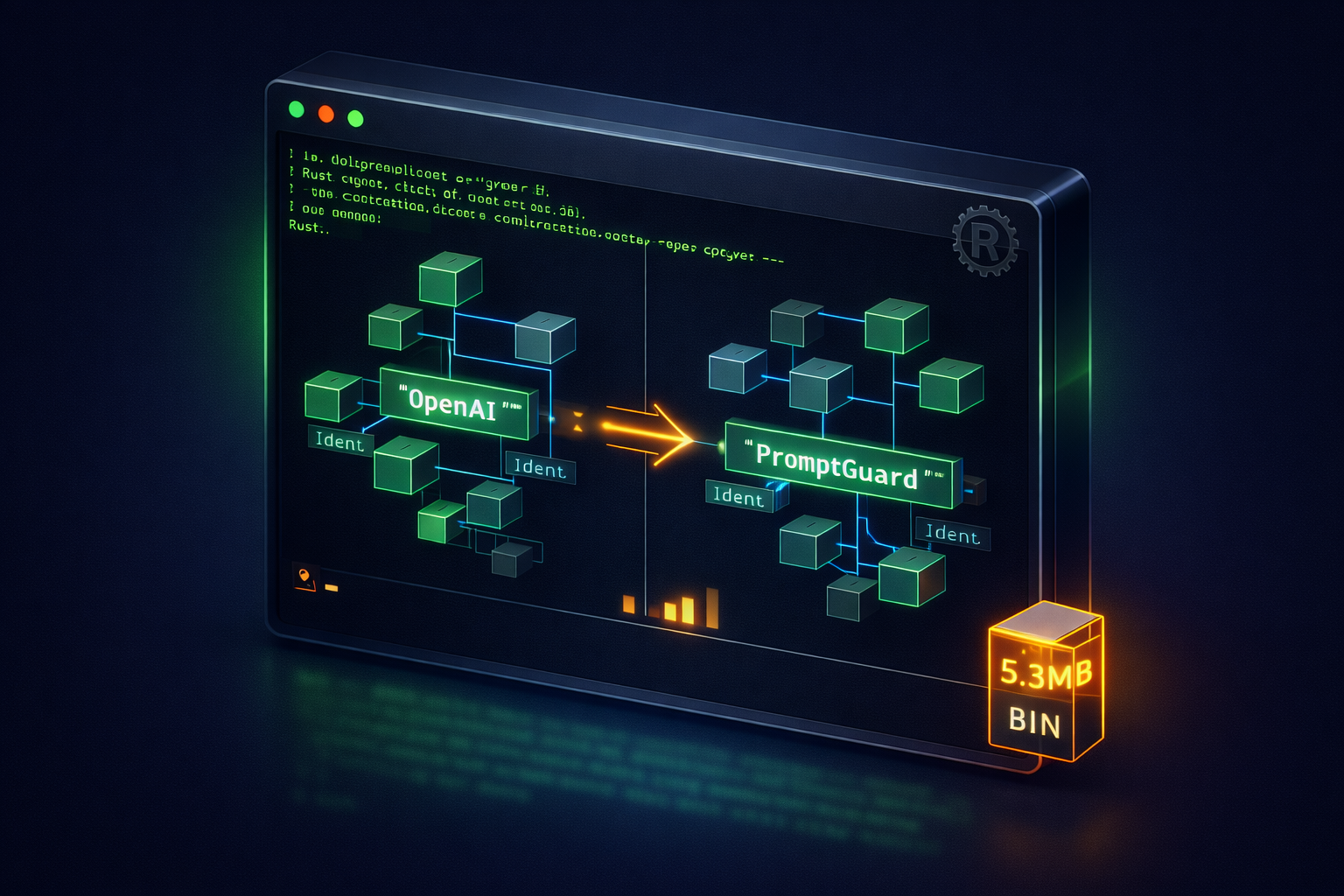

Why Rust?

We wrote the core proxy in Python first. We hit the GIL wall at 500 concurrent requests. We rewrote the hot path in Rust.

- Memory Safety: Zero segfaults.

- Concurrency: Tokio handles 10k connections/sec.

- Python Interop: We bind the Rust core to Python via PyO3 so we can still use the ML ecosystem.

Conclusion

You don't fight fire with fire. You fight fire with water. You don't secure LLMs with more LLMs. You secure them with engineering.

READ MORE

How We Built a Sub-10ms AI Firewall

Security is useless if it destroys your latency. Here is the engineering story of how we optimized PromptGuard's hybrid architecture to inspect prompts in 8ms.

Why Support Bots Are Your Biggest Security Hole (And How We Fix It)

We've seen how easy it is to trick a helpful bot into leaking user data. Here is the architecture we recommend to prevent it without killing the user experience.

Stop Manually Updating Your SDKs: Why We Built a CLI

Security that requires manual code changes is security that gets skipped. Here is why we built a CLI to secure codebases automatically.