How We Built a Sub-10ms AI Firewall

When we started PromptGuard, we set a hard constraint: a maximum P50 latency of 10ms.

If you are adding a security layer to an LLM app, you are already dealing with the slow inference times of GPT-4 or Claude. You cannot afford to add another 500ms round-trip for a security check.

This constraint forced us to abandon the standard "Let's just ask another LLM if this is safe" approach. Instead, we had to get creative.

The Latency Budget

10ms is tight.

- Network overhead: ~2-3ms

- JSON parsing/serialization: ~1ms

- Remaining budget for logic: ~6ms

You can't run a 70B parameter model in 6ms. You can barely run a database query.
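To make the target concrete, here is a minimal sketch of how a P50 budget could be checked. It is not PromptGuard's benchmarking code; the helper names (`measure_latencies_ms`, `p50_ms`, `within_budget`) are hypothetical, and `handler` stands in for whatever function serves a request.

```python
import statistics
import time
from typing import Callable, List


def measure_latencies_ms(handler: Callable[[str], object], prompts: List[str]) -> List[float]:
    """Time each call to `handler` and return per-request latencies in milliseconds."""
    latencies = []
    for prompt in prompts:
        start = time.perf_counter()
        handler(prompt)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def p50_ms(latencies_ms: List[float]) -> float:
    """P50 is the median: half of the requests finish at or below this value."""
    return statistics.median(latencies_ms)


def within_budget(latencies_ms: List[float], budget_ms: float = 10.0) -> bool:
    """Check the measured median against the budget (10ms in this post)."""
    return p50_ms(latencies_ms) <= budget_ms
```

Because P50 is a median, a single slow request in the sample does not move it much. That property matters when a small share of traffic is allowed to take a slower route.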

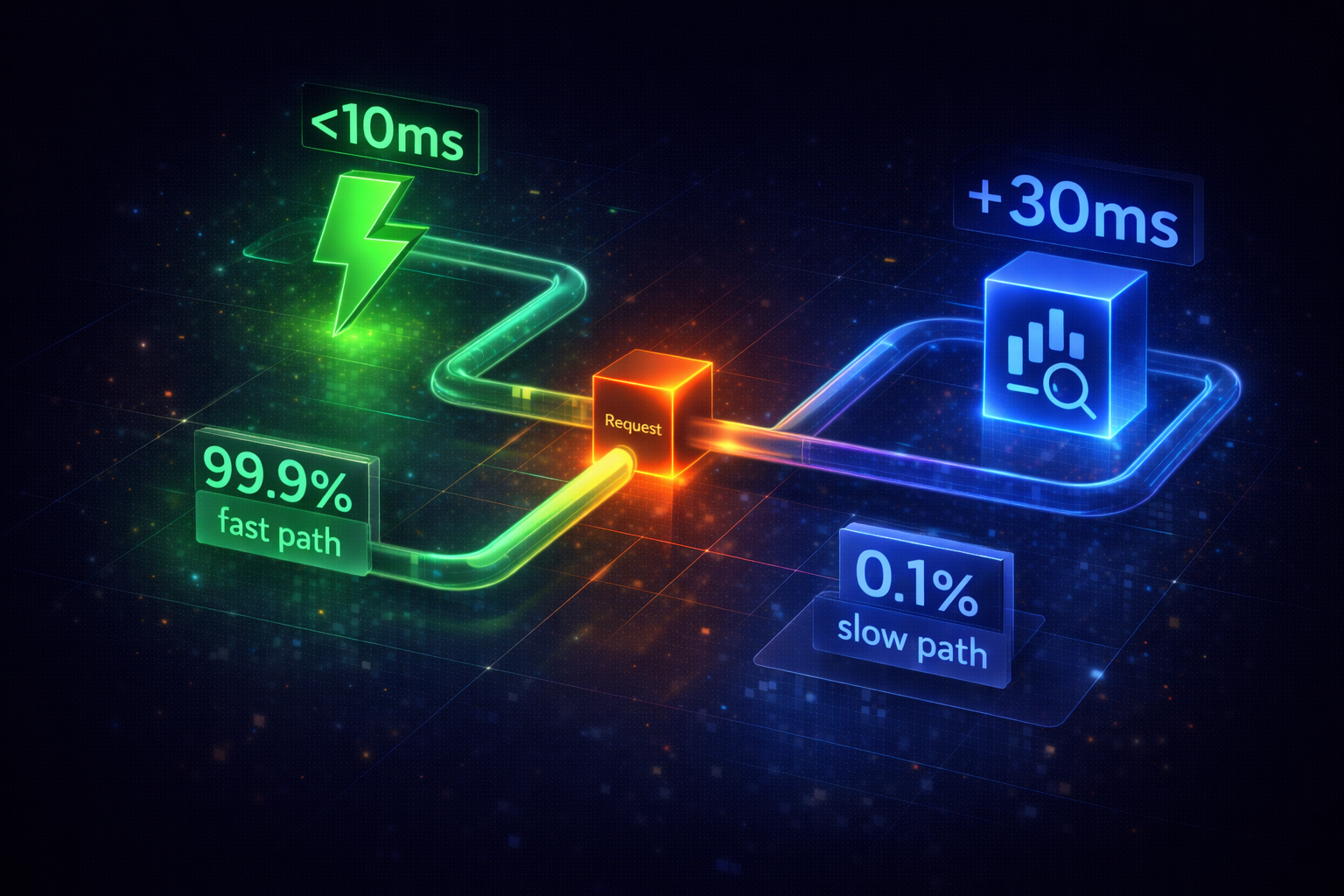

The Architecture: Fast Path vs. Slow Path

We settled on a Hybrid Hierarchical Architecture.

1. The Fast Path (The 99% Case)

We realized that 99% of requests are either obviously safe or obviously malicious. We didn't need a reasoning engine to detect them; we needed pattern recognition.

We deployed DeBERTa-v3-small models fine-tuned specifically for:

- Prompt Injection

- PII (Named Entity Recognition)

- Toxicity

These models are small (under 100MB). But running them in Python for every request was still too slow due to the GIL and overhead.

Optimization: We exported these models to ONNX, quantized them, and ran them via onnxruntime with aggressive caching.

- Result: ~6ms inference time on CPU.

2. The Semantic Cache (0ms)

The fastest code is code that doesn't run. We implemented a semantic caching layer using vector embeddings. If User A sends a prompt that is semantically near-identical (cosine similarity of 0.99 or higher) to a prompt we scanned yesterday, we return the cached result without running the detection models again.

This hit rate is surprisingly high (30-40%) for production apps where users ask repetitive questions.
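The lookup logic can be sketched in a few lines. This is an illustrative in-memory version, not our production code: the `SemanticCache` class and its methods are hypothetical names, the caller is assumed to supply the embedding, and a linear scan stands in for the Redis lookup so the example stays self-contained.

```python
import math
from typing import List, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Maps prompt embeddings to previously computed scan verdicts."""

    def __init__(self, threshold: float = 0.99) -> None:
        self.threshold = threshold
        self._entries: List[Tuple[List[float], str]] = []

    def put(self, embedding: Sequence[float], verdict: str) -> None:
        self._entries.append((list(embedding), verdict))

    def get(self, embedding: Sequence[float]) -> Optional[str]:
        """Return the verdict of the closest entry at or above the threshold."""
        best_score = -1.0
        best_verdict: Optional[str] = None
        for cached, verdict in self._entries:
            score = cosine_similarity(embedding, cached)
            if score >= self.threshold and score > best_score:
                best_score, best_verdict = score, verdict
        return best_verdict  # None means a cache miss: run the models


cache = SemanticCache()
cache.put([1.0, 0.0], "safe")
hit = cache.get([1.0, 0.001])   # nearly the same direction as the cached vector
miss = cache.get([0.0, 1.0])    # orthogonal vector, far below the threshold
```

A high threshold such as 0.99 keeps the cache conservative: a prompt that only loosely resembles an earlier one is scanned again rather than inheriting its verdict.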

3. The Slow Path (The "Verify" Step)

If the Fast Path models return a confidence score between 0.4 and 0.8 (the "unsure" zone), we fall back to a larger model. This is where we pay the latency cost (~150ms). But because this only happens for under 1% of traffic, the mean latency stays low and the P50 is unaffected.
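The routing decision and its effect on average latency can be sketched as follows. This is a simplified illustration under stated assumptions: the score is treated as the probability that a prompt is malicious (so low scores are allowed and high scores are blocked), the zone boundaries are inclusive, and `route`, `fast_score`, `slow_verify`, and `mean_latency_ms` are hypothetical names.

```python
from typing import Callable

UNSURE_LOW = 0.4   # at or above this, the fast model is no longer confident it's safe
UNSURE_HIGH = 0.8  # above this, the fast model is confident it's malicious


def route(
    prompt: str,
    fast_score: Callable[[str], float],
    slow_verify: Callable[[str], bool],
) -> str:
    """Decide with the fast model alone unless its score falls in the unsure zone."""
    score = fast_score(prompt)
    if score < UNSURE_LOW:
        return "allow"
    if score > UNSURE_HIGH:
        return "block"
    # Unsure zone: pay for the larger model (assumed to return True if malicious).
    return "block" if slow_verify(prompt) else "allow"


def mean_latency_ms(fast_ms: float, slow_ms: float, slow_fraction: float) -> float:
    """Every request pays the fast path; only a fraction also pays the slow path."""
    return fast_ms + slow_fraction * slow_ms
```

Plugging in the figures from this post (about 6ms for the fast path, about 150ms for the slow path, and at most 1% of traffic escalated), `mean_latency_ms(6.0, 150.0, 0.01)` comes out at roughly 7.5ms, so the slow path costs the average request no more than about 1.5ms.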

The Deployment Stack

We needed this to be self-hostable, so we couldn't rely on proprietary cloud infrastructure (like AWS Lambda warmers).

- API Layer: Python (FastAPI). We considered Rust, but the ML ecosystem in Python is just too good. We used uvloop to squeeze every drop of performance out of asyncio.

- Vector Store: Redis. We needed sub-millisecond lookups. Postgres/pgvector was too slow for the hot path.

- Queue: None. Everything is synchronous in the Fast Path. Queues add latency.

Lessons Learned

1. Tokenizers are Slow

We found that tokenizing the input text was taking 30% of our total request time.

Fix: We switched to the Rust-based tokenizers library and pre-warmed the tokenizer in memory.

2. Batching doesn't help latency

Batching increases throughput but hurts latency. Since our goal was minimizing the time per request, we disabled batching for the real-time API. Each request is processed immediately.
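A toy model shows why. The sketch below is deliberately simplified and is not a measurement of our system: it assumes a batch starts only when its last member has arrived, that a batch costs the same inference time as a single request (the best case for batching), and that batches never queue behind each other. The function name and the numbers are hypothetical.

```python
from typing import List


def batched_latencies_ms(
    arrival_times_ms: List[float], batch_size: int, inference_ms: float
) -> List[float]:
    """Per-request latency when requests (sorted by arrival) are grouped into batches."""
    latencies: List[float] = []
    for i in range(0, len(arrival_times_ms), batch_size):
        batch = arrival_times_ms[i:i + batch_size]
        # The batch cannot start before its last request arrives.
        finish = batch[-1] + inference_ms
        latencies.extend(finish - arrived for arrived in batch)
    return latencies


arrivals = [0.0, 2.0, 4.0, 6.0]  # one request every 2ms
unbatched = batched_latencies_ms(arrivals, batch_size=1, inference_ms=6.0)
batched = batched_latencies_ms(arrivals, batch_size=4, inference_ms=6.0)
```

With a batch size of 1, every request in this example takes 6ms. With a batch size of 4, the last request still takes 6ms, but the earlier ones wait for the batch to fill and take 8ms, 10ms, and 12ms. No request gets faster, even under assumptions that favor batching.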

Conclusion

You don't need a massive LLM to do security. By combining classical ML (BERT/DeBERTa), semantic caching, and rigorous systems engineering, you can build a security layer that feels invisible.
