The Cost of False Positives

In security, there is an old saying: "It's better to block a legitimate user than let a hacker in."

In the AI world, that is wrong. If you block a legitimate user who is trying to use your chatbot, they don't file a ticket. They just leave. They think your AI is "broken" or "dumb."

False positives are the silent killer of AI adoption.

The "Ignore Previous Instructions" Paradox

The phrase "Ignore previous instructions" is the classic prompt injection attack. It is also a phrase used by:

- Lawyers ("Ignore previous instructions regarding the contract...")

- Teachers ("Ignore previous instructions for the essay...")

- Developers debugging code.

If you regex-block that phrase, you break the app for lawyers, teachers, and devs.

Our Approach: Context is King

We learned that you cannot classify a prompt based on keywords alone. You need Contextual Awareness.

We realized our classifiers were failing on fictional contexts.

- Prompt: "Write a story about a hacker who types 'drop table users'."

- Old Classifier: BLOCKED (SQL Injection detected).

- User Reaction: "This AI is annoying."

We had to retrain our models to understand Intent vs. Content. The content is SQL injection. The intent is creative writing.

The Troubleshooting Loop

When a false positive happens (and they still do, about 0.01% of the time), we have a dedicated "Flight Recorder" process.

- The Log: Every block captures the full prompt context.

- The Replay: We feed the failed prompt into our "Shadow Mode" model (a more expensive, slower model).

- The Diff: If Shadow Mode says "Safe" but Fast Mode said "Block," we auto-flag it for dataset labeling.

- The Retrain: Every Friday, we fine-tune the Fast Mode model on the week's false positives.

Results

We reduced our false positive rate from 2.4% (unusable) to 0.01% (enterprise grade). It wasn't magic. It was just a relentless focus on the data.

If you are building your own filters, remember: Log your blocks. If you aren't looking at what you blocked, you have no idea who you are annoying.

READ MORE

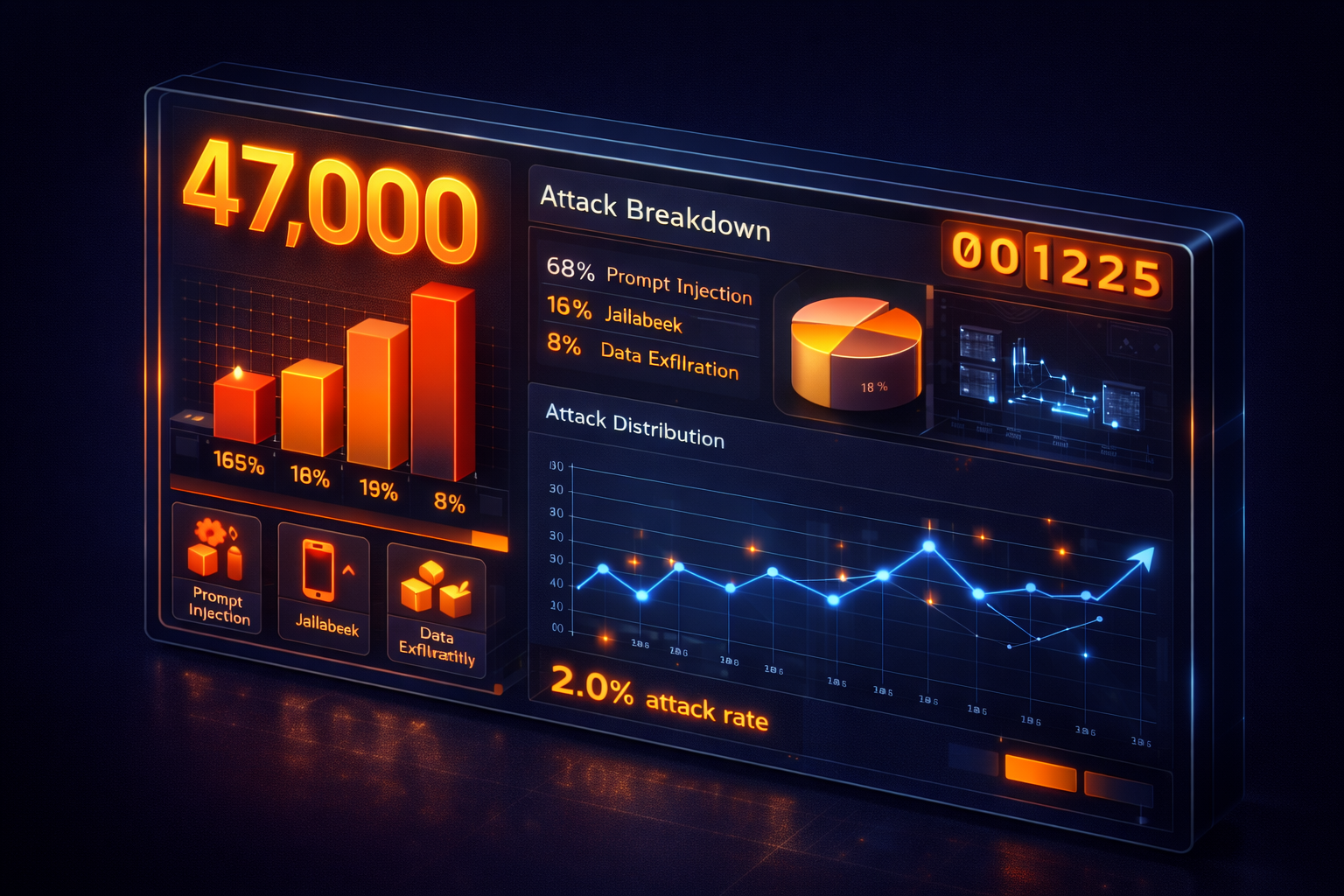

What 100 Million Attacks Taught Us About AI Security

We analyzed the last 100M requests blocked by PromptGuard. The data surprised us. It's not hackers—it's regular users trying to break your rules.

LangChain Is Unsafe by Default: How to Secure Your Chains

LangChain makes it easy to build agents. It also makes it easy to build remote code execution vulnerabilities. Here is the right way to secure your chains.

PCI-DSS for AI: Don't Let Your Chatbot Touch Credit Cards

If your AI agent sees a credit card number, your entire compliance scope just exploded. Here is how to keep your PCI audit boring.